Identifying Requirements Defects through Allocation and Traceability

The Airborne Low Frequency Sonar System (ALFS) is the next generation of dipping sonar system being developed for U.S. Navy helicopters. ALFS is being built by the Naval And Maritime System Engineering Center, which is part of the Hughes Aircraft Company located in Fullerton, CA. This project is in the Engineering Developmental Model (EDM) phase and will be conducting its Operational Developmental Testing in 1996. The ALFS project is a Military Standard 2167A program, which requires requirements tracking throughout the program’s life cycle. This paper focuses on how the requirements management task was accomplished and the lessons learned.

The Problem

After I had been on the program for about six months, I was assigned the requirements management task for ALFS. This task had already passed through several people before me. Before my assignment, ALFS had established an Excel spreadsheet as the program’s requirements tracking tool. My first task was to verify this Excel requirements spreadsheet against a new draft release of the System Segment Specification (SSS). During this task, it became apparent that we had serious requirements problems. About 20-30% of the requirements contained in the SSS were missing from the Excel spreadsheet. These were typical spreadsheet problems, resulting mostly from the limit on cell size and from engineers abbreviating the requirement text to make it fit.

These problems made it clear that a spreadsheet should not be used for requirements management. Tracking requirements per 2167A with the Excel spreadsheet was also very labor intensive. For instance, producing a requirements tracking report for a new release of the documents took two engineers two weeks, and the report had numerous errors. After project management reevaluated the situation, my immediate mission was changed to locating a requirements management tool.

Find a Tool

Now that the need was identified, ALFS needed a requirements management tool as soon as possible. First, I drew up a list of candidate tools. Then I called the vendors to get capabilities, platform data, and costs, and to request demo copies. During this exercise, I also discovered the difference between system engineering design tools and requirements management tools. The program decided that a design tool was too expensive and had too long a learning curve, and opted for a requirements management tool.

The requirement tool had to have a parser because most of the documents were already produced or being generated. The ALFS documents were produced on Macintosh PCs. A half dozen requirement management tools were identified as possible candidates. The most critical requirements in selecting a tool were:

- parsing capability

- a tool easy to use

- quick availability

- phone support

- low cost

The tool selected was Document Director, which met the requirements and had the added advantage that it was being used by our customer, NAVAIR. Document Director is produced by Compliance Automation Inc., located in Houston, Texas. Of all the tools for which I received demo disks, Document Director seemed to fit the best. It was easy to use, had a good user manual, and was low cost. The user manual seemed to contain just about all the data I needed to get started. Compliance Automation also supplied a 30-day trial license so we could try the tool to ensure it was a fit. Compliance Automation shipped the software overnight by Federal Express, which allowed us to get started the next day. After reading the manual and trying the tool for a day, I made the recommendation to my supervisor. He took Document Director home over Christmas to see what he thought. After several phone calls and discussions, he agreed, and Document Director became the requirements management tool for the ALFS program.

Requirements Allocation

NAVAIR had already parsed a preliminary release of the ALFS System Segment Specification into Document Director. NAVAIR had linked the Request For Proposal to the SSS and identified the requirements by type: Performance, Allocable, and Design. We took advantage of NAVAIR’s work and built upon it, adding user-defined fields for each of the Computer Software Configuration Items (CSCIs) and Hardware Configuration Items (HWCIs) to the database for system allocation. This method of allocating with user-defined fields was started by NAVAIR, and we kept the databases structured the same way to allow exchange of data between us.

Allocation reports were then given to the respective leads for the associated CSCI or HWCI. These reports could be customized for specific CSCIs or HWCIs depending on the need. Because they contained no irrelevant requirements, they were easy to review and wasted none of the reviewer’s time. It was the lead’s responsibility to distribute these reports, get them reviewed, and return them to me with any changes. Once the SSS allocation was completed, the next step was to parse the subordinate documents, namely the Software and Hardware Requirement Specifications.

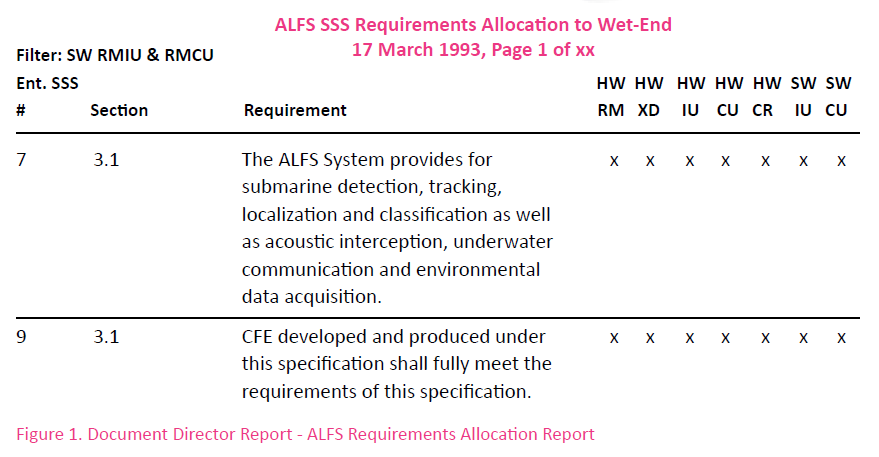

Figure 1 is a sample requirements allocation report.
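The idea behind the allocation report can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not Document Director's actual data model or interface: the `Requirement` class, the `allocated_to` field standing in for the user-defined allocation fields, and all identifiers and texts are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Requirement:
    """One parsed requirement (hypothetical structure)."""
    req_id: str
    text: str
    # Stand-in for the user-defined allocation fields: the set of
    # configuration items (CSCIs/HWCIs) this requirement is allocated to.
    allocated_to: set = field(default_factory=set)


def allocation_report(requirements, config_item):
    """Return only the requirements allocated to one configuration item."""
    return [r for r in requirements if config_item in r.allocated_to]


# Made-up sample data, not ALFS requirements.
sss = [
    Requirement("SSS-001", "Placeholder requirement A", {"CSCI-A"}),
    Requirement("SSS-002", "Placeholder requirement B", {"CSCI-A", "HWCI-1"}),
    Requirement("SSS-003", "Placeholder requirement C", {"HWCI-1"}),
]

for req in allocation_report(sss, "HWCI-1"):
    print(req.req_id, req.text)
```

Because the filter runs on the allocation field, each lead sees only the requirements relevant to that lead's configuration item.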

Requirements Test Methods

The ALFS SSS document was required to document the test methods for each of the requirements. This was handled by adding user-defined fields for each of the test methods. These test method fields allowed SSS reports to be generated showing requirements by a specific test method. This was very useful during some trade-off analysis done for Design Verification Testing.

Figure 2 is a sample requirements to test method report.

Requirements Flowdown

The flowdown of requirements from the top level to the subordinate documents was accomplished by using Document Director’s two-window feature. The SSS document is opened in one window and the subordinate document, e.g. the Software Requirements Specification (SRS), in the second window. The appropriate text is selected in each document, a key is pressed, and the link is made. Quality checks on the linking process were accomplished by running an orphan report. The orphan report locates all the child requirements in the SRS that have not been linked to a parent requirement in the SSS. Orphan requirements can indicate work being performed that is out of scope, adding extra cost to the program. The end result is software being implemented with no driving requirement.

The requirement flowdown or linking was accomplished in the following manner. I would generate two reports for the lead person, the first being an SSS requirement report with only those requirements allocated to that specific CSCI or HWCI, and the second being a report of all the requirements contained in the respective SRS or Hardware Requirement Specification (HRS) with its respective requirements numbers.

The respective engineer would use these two reports to identify corresponding links by writing the associated requirement numbers on one report. The database was then updated per these reports. This technique allowed several requirements flowdowns to be performed simultaneously. This was key to the schedule because ALFS was using a single-user license and was starting months behind on this task.

Lesson Learned

A more cost-effective way would be to use a network version, with multiple users, and generate the documents online with the tool. The tool should be implemented in the proposal phase.

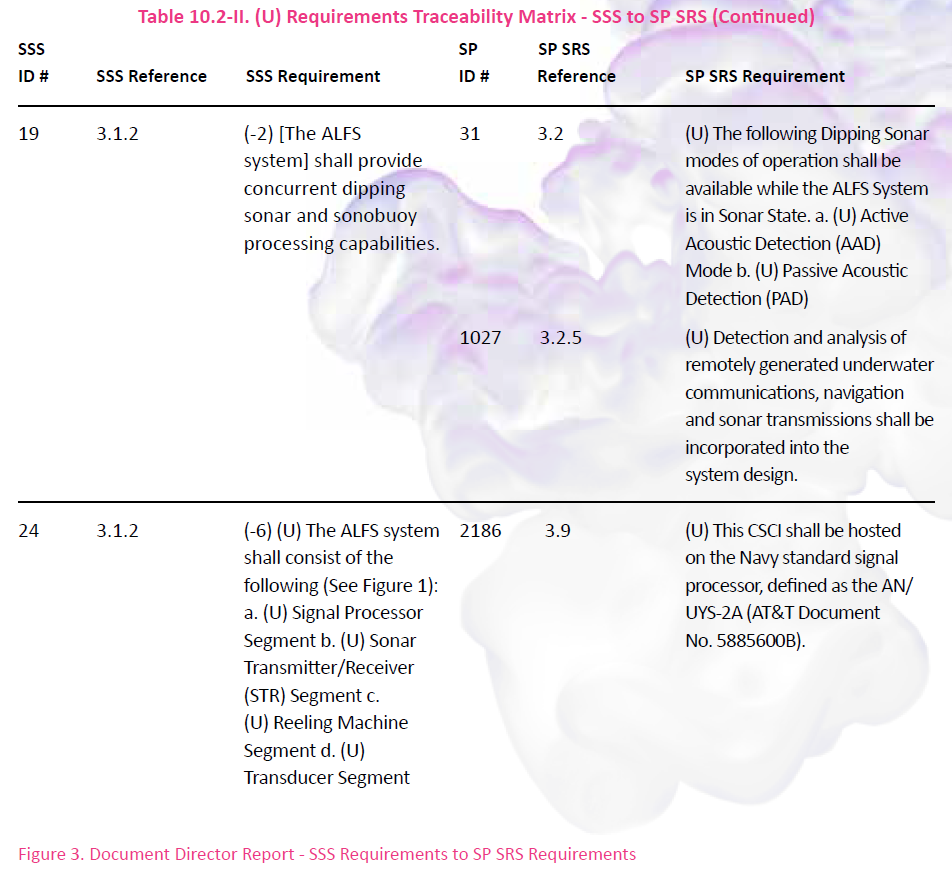

Figure 3 is a requirements flowdown report, showing SSS requirements to SP SRS requirements.

Checking Data

Two reports were very effective in checking data: the Widow (childless requirements) report and the Orphan report. The Widow report finds the requirements or entities that have no child link. The significance of a childless requirement is that the requirement is not being implemented, is implemented incorrectly, or cannot be identified. Orphans are subordinate document requirements with no parent link. Orphans can indicate additional work being done that is not required (added cost). An orphan may also indicate a missing parent requirement. These two reports were run on all the documents at various times throughout the program as a quality check. The same checks (childless and orphans) could be used on SRSs to find requirements with no test case or Computer Software Unit (CSU). The requirement to test case and CSU relationships are explained in the following paragraphs. Document Director’s filter capability can find these types of problems (missing data) very effectively.
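The two checks reduce to simple set differences over the parent-to-child links. The following is a minimal sketch, assuming links are stored as (parent, child) pairs; the function names and the requirement identifiers are hypothetical and do not reflect how Document Director implements its reports.

```python
def orphans(child_ids, links):
    """Child requirements that appear in no (parent, child) link."""
    linked_children = {child for _, child in links}
    return sorted(set(child_ids) - linked_children)


def widows(parent_ids, links):
    """Parent requirements that have no child link (childless)."""
    linked_parents = {parent for parent, _ in links}
    return sorted(set(parent_ids) - linked_parents)


# Made-up identifiers for illustration only.
sss_ids = ["SSS-001", "SSS-002", "SSS-003"]
srs_ids = ["SRS-010", "SRS-011", "SRS-012"]
links = [("SSS-001", "SRS-010"), ("SSS-001", "SRS-011")]

print("Widows: ", widows(sss_ids, links))   # ['SSS-002', 'SSS-003']
print("Orphans:", orphans(srs_ids, links))  # ['SRS-012']
```

The same two functions apply unchanged when the "children" are test cases or CSUs rather than SRS requirements.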

Requirements <–> CSU/CSC Tracking

Document Director was also used for the SRSs which had to have a traceability matrix showing requirements to CSU and CSC. ALFS used the subordinate documents already contained in the database and added a CSU/CSC file.

The SRS requirements were linked (parent-to-child relationship) to their respective CSU/CSC entity contained in the added CSU file. This seemed to be the most cost-effective way of doing business. The CSU file consisted of just a list, line after line of Computer Software Component (CSC) numbers, with the associated CSU data contained in a user-defined field. This configuration minimized the maintenance caused by CSU/CSC changes, because only one file (the CSU file) had to be changed, one line for each change, in one place. If requirements were moved to other CSUs/CSCs, the associated links were broken and the correct ones made with a stroke of a key.

Figure 4 is an example Document Director report of CSCs / CSUs to SP SRS requirements.
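The maintenance benefit comes from indirection: requirements point at an entry in the CSU file rather than carrying the CSU/CSC data themselves. A rough sketch of that structure follows, assuming a dictionary keyed by CSC number with the CSU held in a field; the names `csu_file`, `links`, and `traceability_matrix`, and all identifiers, are hypothetical.

```python
# CSU file: one entry per CSC number, with the CSU data in a field.
csu_file = {
    "CSC-1": {"csu": "CSU-1.1"},
    "CSC-2": {"csu": "CSU-2.1"},
}

# Parent-to-child links from SRS requirements to CSU-file entries.
links = {
    "SRS-010": ["CSC-1"],
    "SRS-011": ["CSC-1", "CSC-2"],
    "SRS-012": [],  # not yet linked; shows up as a blank row
}


def traceability_matrix(links, csu_file):
    """Build (requirement, CSC, CSU) rows by following the links."""
    rows = []
    for req_id in sorted(links):
        cscs = links[req_id]
        if not cscs:
            rows.append((req_id, "", ""))
        for csc in cscs:
            rows.append((req_id, csc, csu_file[csc]["csu"]))
    return rows


# One change, in one place: every linked requirement picks it up.
csu_file["CSC-1"]["csu"] = "CSU-1.2"
for row in traceability_matrix(links, csu_file):
    print(row)
```

No requirement record is touched by the rename; only the single CSU-file entry changes, which is the reduced-maintenance property described above.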

Requirements <–> Test Cases

The same structure used for requirements to CSU/CSC was implemented for test cases. The test case file was created like the CSU/CSC file, except that it contained test case numbers. This file was configured and linked the same way as the CSU/CSC file for the same benefit: reduced maintenance for changes. Change one test case number in the test case file, and the Document Director report shows all the requirements with the correct test case number.

Both the CSU/CSC and test case reports were used as quality checks of this process. A requirement with no CSU/CSC or test case was easily identified by a blank box next to the requirement. The same check could be used to find a CSU/CSC or test case with no requirement.

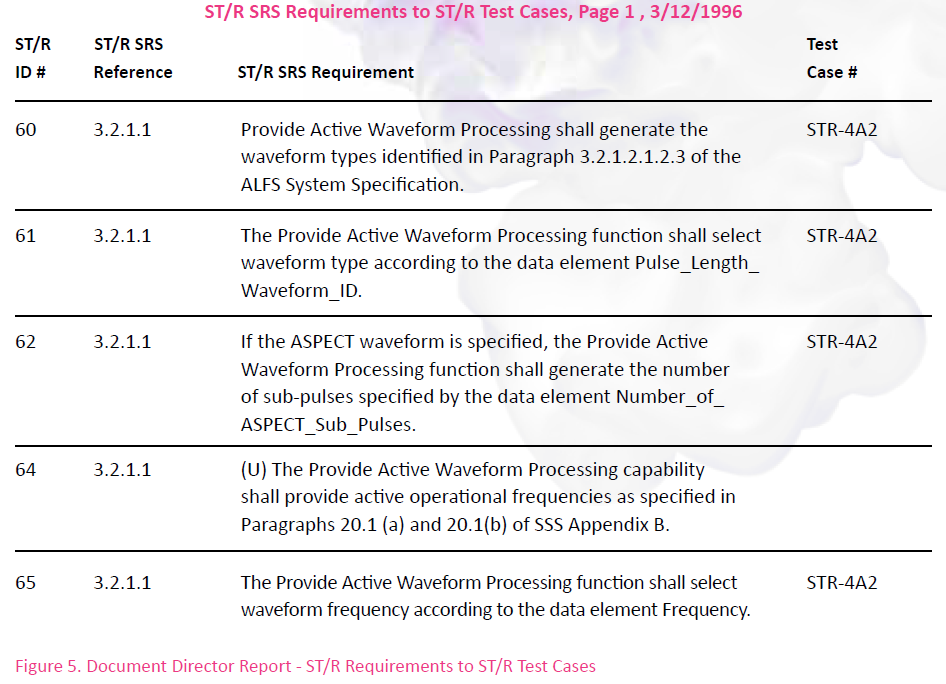

Figure 5 is an example of ST/R requirements to ST/R test case report.

Additional Benefits

Document Director was very useful in reviewing updated releases of our subcontractor documents. These documents were not of the best quality: change bars were inconsistent and did not indicate the specific change in a sentence. The change bars were applied on a paragraph basis, indicating only that a change was made somewhere within. Reviewing these documents line by line, hoping to catch the changes in the new document, would have been very expensive. Document Director was very cost-effective in this effort. The original documents were already in the ALFS Document Director database. So, when updated specifications arrived from our subcontractors, they were parsed into Document Director and a report was generated. This report of the updated document’s requirements was compared to the requirements of the baseline document. The two reports were reviewed side by side in a fraction of the time it would take to review the whole document. This process worked very well since our concern was missing and changed requirements.

The ALFS Document Director database was also used by a related off-shoot program called Merlin, a dipping helicopter system for the UK. An interface box of ALFS is used on this program. Using the existing database and the linking capability of Document Director, the requirements applicable to Merlin were identified quickly. This allowed the software group to identify the unique CSUs/CSCs and test cases that had to be addressed.
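On ALFS the two reports were compared by eye, side by side. As an illustration only, the same comparison can be sketched programmatically, assuming each release has been parsed into a mapping from requirement (or paragraph) number to text; the function name, keys, and sample texts below are hypothetical.

```python
def compare_releases(baseline, updated):
    """Classify requirements as missing, added, or changed between releases.

    Both arguments map a requirement identifier to its text. Text is
    compared after whitespace normalization so reflowed lines do not
    register as changes.
    """
    base_keys, new_keys = set(baseline), set(updated)
    changed = sorted(
        key for key in base_keys & new_keys
        if baseline[key].split() != updated[key].split()
    )
    return {
        "missing": sorted(base_keys - new_keys),
        "added": sorted(new_keys - base_keys),
        "changed": changed,
    }


# Made-up sample data for illustration only.
baseline = {
    "3.2.1": "The unit shall do A.",
    "3.2.2": "The unit shall do B.",
    "3.2.3": "The unit shall do C.",
}
updated = {
    "3.2.1": "The unit  shall do A.",     # whitespace only, not a change
    "3.2.2": "The unit shall do B or D.",  # changed
    "3.2.4": "The unit shall do E.",       # added; 3.2.3 is missing
}

print(compare_releases(baseline, updated))
```

This targets exactly the two concerns named above, missing and changed requirements, without depending on the subcontractor's change bars.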

Configuration Control

Once the database was populated and the preliminary specifications released, the next issue was configuration control of the database. I needed to ensure the electronic copies (released specs) at NAVAIR matched the database, so I established the following process. The Specification Change Notices (SCNs) had to be released prior to being input into the Document Director database. Then, to assist in quality checks on the input of these changes, I established a configuration file. This file consisted of entities that were paragraphs of text, one per SCN number. These SCN paragraphs contained the description of the SCN and the following data: SCN #, Date of SCN, Add, Modify, Change, and a Document field. Each SCN paragraph was linked to the associated entities (requirements or text) that were changed in the document. A report was generated showing the linked changes in the other documents, all categorized by SCN. This configuration file and its associated linked files provided a good quality-checking mechanism to validate that the changes were incorporated into the master database. Configuration reports could be generated from this file and its associated links.

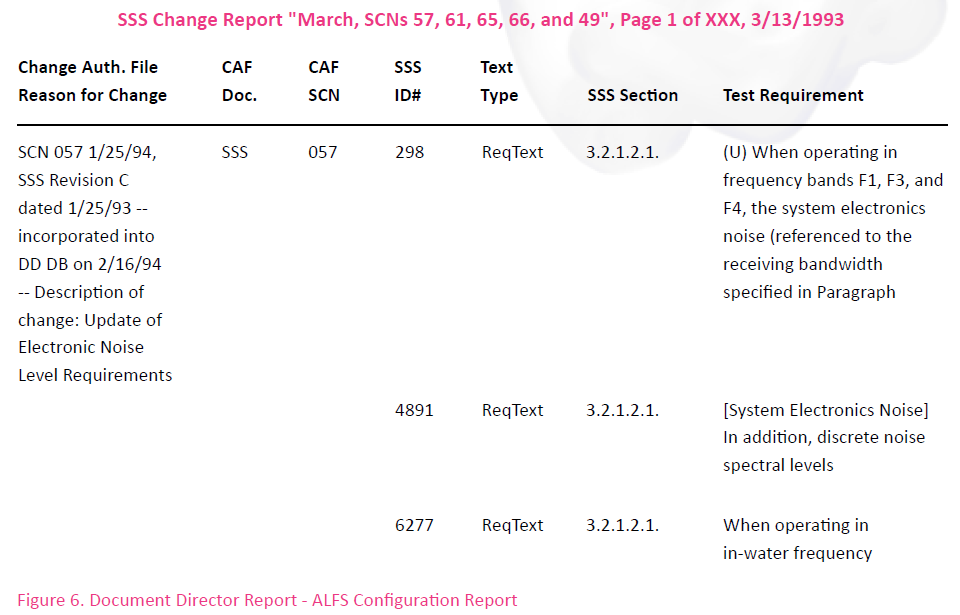

Figure 6 contains an ALFS Configuration Report. Some of the text in this report was cut off to ensure it would fit vertically in this report for readability.

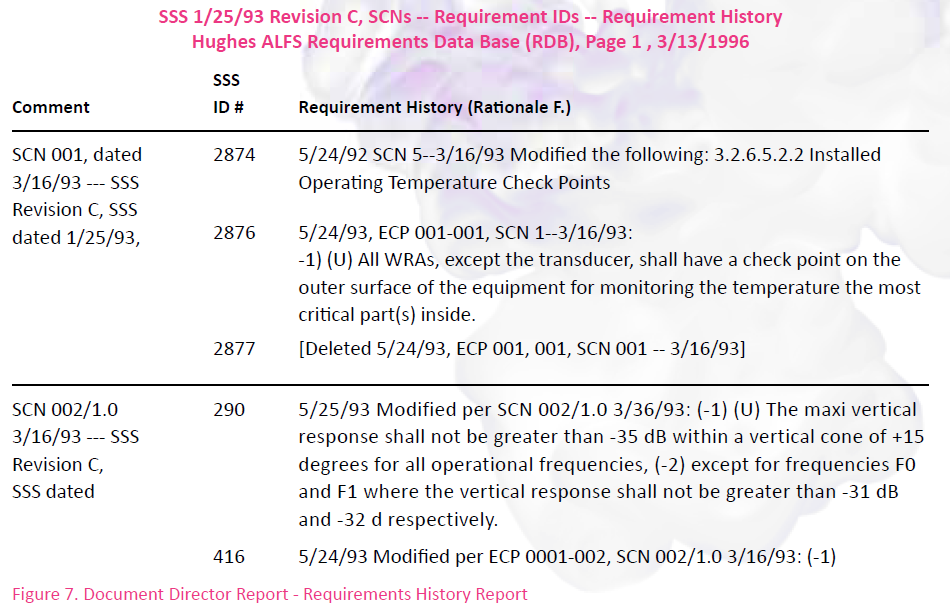

We used the Rationale field for document change history, since ALFS was not intending to use it for the design rationale. As part of the SCN change process, the current text (prior to change) was copied into the Rationale field. This provided the history of each requirement change, change after change.

Figure 7 contains an ALFS Requirement History Report, which uses the Rationale field.

Monthly Requirement Reports

As a 2167A program, ALFS was required to provide monthly software reports on requirements changes to NAVAIR. The added, modified, and deleted requirements had to be identified by specification. By using the add, modify, and delete fields in each of the document files, these numbers were identified in minutes. The change status fields were updated as part of the SCN process. Each month this data was collected and the fields were cleared for the next set of changes. Deleted requirement entities were never actually removed from the database, because once an entity was removed, all of its records would be lost. Instead, the text was deleted and replaced with [deleted SCN #] to allow tracking.
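The monthly cycle described above (mark, count, clear, and replace deleted text with a marker rather than removing the entity) can be sketched as follows. This is a simplified illustration under stated assumptions: a single `change_status` field stands in for the separate add, modify, and delete fields, and the entity layout, function names, and SCN number are all hypothetical.

```python
from collections import Counter

STATUSES = ("add", "modify", "delete")


def mark_deleted(entity, scn_number):
    """Keep the entity but replace its text with a deletion marker."""
    entity["text"] = f"[deleted SCN {scn_number}]"
    entity["change_status"] = "delete"


def monthly_counts(documents):
    """Count added, modified, and deleted requirements per specification."""
    report = {}
    for spec, entities in documents.items():
        counts = Counter(e["change_status"] for e in entities)
        report[spec] = {status: counts.get(status, 0) for status in STATUSES}
    return report


def clear_change_status(documents):
    """Reset the change fields once the monthly report has been collected."""
    for entities in documents.values():
        for entity in entities:
            entity["change_status"] = None


# Made-up sample data for illustration only.
documents = {
    "SSS": [
        {"id": "SSS-001", "text": "Requirement A", "change_status": "modify"},
        {"id": "SSS-002", "text": "Requirement B", "change_status": None},
        {"id": "SSS-003", "text": "Requirement C", "change_status": "add"},
    ],
}

mark_deleted(documents["SSS"][1], "0042")
report = monthly_counts(documents)
print(report)
clear_change_status(documents)
```

Note that the deleted entity remains in the database with its identifier and links intact; only its text is replaced, which preserves the tracking record.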

Scope of ALFS Requirements Task

The ALFS Document Director database – from top level System Segment Specification (SSS) to the Software Requirements Specification (SRS) and Hardware Specifications (HWS) – contains a total of 4245 requirements. The SSS alone has 1702 requirements as of 1/25/93. The original ALFS Excel spreadsheet contained a total of 1402 requirements.

Closing

Tool implementation should be done as early as possible; my recommendation would be the RFP phase. Capturing the design rationale should be a requirement imposed by program management. The Rationale field is intended to capture the requirement and design rationale for posterity. This can be a big aid in helping new engineers understand certain nuances of the requirement or design.

This tool would be very effective on RFPs for grasping and reviewing the requirements and allocating them to the teams quickly. A critical key is having the documents in electronic format ASAP to allow parsing. Bad requirements (i.e., vague, not testable, etc.) could be identified to the customer for resolution prior to submitting the proposal.

The RFP and Proposal requirements could be linked, thus allowing a compliance matrix to be generated automatically. Quality checks could be done as the RFP is progressing, with links to comments, for everyone on the proposal team to review…

In closing, it’s my opinion that Document Director is a very cost-effective tool for the requirements management process. The reasons are its low cost, its ease of use, and (most significant) the excellent schema support from Compliance Automation Inc. Document Director has since been replaced by an even better tool called Vital Link. After other tool failures I have been associated with, it was great to be associated with the success of using Document Director on the ALFS program.

About the Author

At the time this paper was written, Buddy Webb had worked at Hughes for thirteen years in the field of Anti-Submarine Warfare and Submarine Combat Systems. His experience also included six years in the submarine Navy, field engineering with the Librascope Division of the Singer Company, and the Bechtel Corporation Nuclear Startup Group. At publication, Buddy had used Document Director for three years to manage the Airborne Low Frequency Sonar (ALFS) program and in consulting to the Surface Search Radar (SSR) program.

Paper written by Buddy F. Webb, 1993.
