This is the fourth post in a series I am writing to explore lessons I have learned about Data Migration. You can read my previous posts on the topic of Knowing Your Data here, here, and here.

We at ArgonDigital are always happy to discuss data migration with you and explore ideas about making your project go smoothly.

With the Knowing Your Data series, I started with a tactical approach. Now I’d like to zoom out and discuss the whole pattern and process of Data Migration. I have worked on three very large projects to help customers replace legacy systems: a digital rights system, a financial system, and an asset management system. Each project involved a heavy load of migration work, and each migration effort had distinct patterns and processes. However, it is possible to glean some general lessons from those experiences. These include:

- Data migration pattern: Extract, Transform, and Load

- Invest in a dedicated data migration team and technology

- Start migration early and plan for constant iteration

- Focus initially on process over accurate results

- Cleanse data as close to its current source as practical

Today I’m going to focus on the data extraction process in lesson 1 above.

Data Migration Pattern

Data migration involves three distinct activities, which you may hear described as “Extract, Transform, and Load” or ETL.

- Extract is about getting data out of the legacy system.

- Transform is about preparing data for the new system: mapping, breaking up or joining together source records, cleansing errant data, otherwise munging source data into the structures and formats required by the target system.

- Load is about putting data into the new system.

Each activity has a distinct flavor and cadence. Each may require unique expertise. Each may involve building or licensing tools and systems to facilitate the work. Each may involve complex processes. However, there are no standardized process flows for these activities because each project is unique. The successful teams I worked with were skilled at applying data migration best practices to their project’s distinct goals, constraints, processes, and tools.

Extract

Extract is the set of activities to get your data out of a legacy system to be replaced. It may also include pulling data from other involved systems that may continue to run in production. The general pattern is to select an extraction tool, design the extracts (the fields and the specific records you need), and manage the extracted data sets. How you will do each of these will depend on many factors which you can assess by answering these questions:

- How is data stored and accessed in current state?

- What is the plan for each source system involved? Is it to be deprecated immediately? Is it to remain in production? Is it to remain in production, only for a time?

- What volume of data across business data objects (BDOs) is to be migrated?

- How often does source data change?

- How much lead time does the team have for extraction activity?

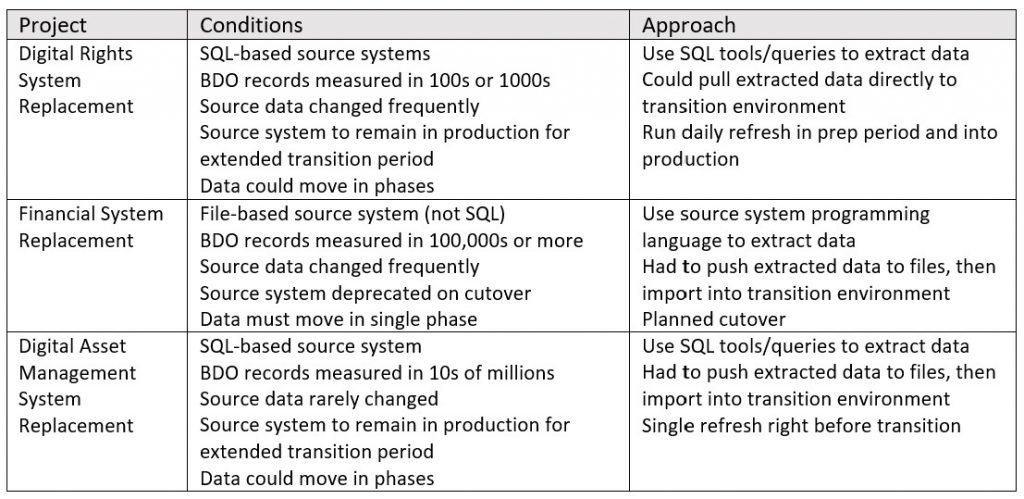

In the three large migration efforts I worked on, there has been enormous variation in the extract activity. I’ll review the characteristics of each.

Project 1 - Digital Rights

A large media company was replacing its home-grown digital rights systems with a new SaaS built and maintained by a vendor. The team designed extract activities according to key facts on the ground such as:

- The source systems ran on SQL-based relational database systems.

- The target system vendor did not offer a migration toolset but did offer a robust API for loading the data.

- The source systems would need to remain in production alongside the new target system for an extended transition period.

- The records for most future state Business Data Objects (BDOs) would need to be built from several source systems, meaning there would be significant transformation required.

- Source data for most current state BDOs changed frequently.

- Source data would require significant cleansing

Project 2 - Financial System

A large financial services company was replacing its obsolete lease accounting system with a new on-premises system built and maintained by a vendor. The team designed extract activities according to these facts on the ground:

- The source system ran on a file-based system that could not be accessed by external tools, so data would need to be exported rather than pulled.

- The target system vendor offered a migration toolset as well as an API for loading data.

- The source system would need to be deprecated immediately upon migration; the business would cut over to the target system at that time.

- All records for all in-scope Business Data Objects (BDOs) would need to be moved for that cut over.

- Moreover, the migrated data to be used in financial calculations would need to be accurate within tight tolerances compared to the old system’s calculations.

- Source data changed frequently.

- Source data would require some cleansing, but considerable transformation since the source and target systems stored similar data points very differently.

Project 3 - Digital Asset Management

A media company was replacing its in-house digital asset management system with a new cloud-based system built and maintained by a vendor. The team designed extract activities according to these project characteristics:

- Although the source system ran on a SQL-based relational database, data was often not well structured or governed.

- The target system vendor offered no migration toolset, and given the volume of records, recommended migration through file upload.

- The source systems would need to remain in production alongside the new target system for an extended transition period.

- Records for in-scope data could be moved in a series of migration phases.

- Source data was rarely changed, but the sheer volume of records made it necessary to track migrated records rigorously.

- Source data would require significant cleansing.

- Some Business Data Objects linked to asset records would not be available until a related system was deployed later.

Project Conditions Drive Approach

The conditions under which each team needed to plan the extract drove very different approaches to the migration process each designed. The table below summarizes conditions and implications on the extraction process:

I’ll pick up the story in my next article where I’ll discuss the data transformation process in the context of these three projects.